The EKS 1.33+ NetworkManager Trap: A Complete systemd-networkd Migration Guide for Rocky & Alma Linux

TL;DR:

- The Blocker: Upgrading to EKS 1.33+ is breaking worker nodes, especially on free community distributions like Rocky Linux and AlmaLinux. Boot times are spiking past 6 minutes, and nodes are failing to get IPs.

- The Root Cause: AWS is deprecating

NetworkManagerin favor ofsystemd-networkd. However, ripping out NetworkManager can leave stale VPC IPs in/etc/resolv.conf. Combined with thesystemd-resolvedstub listener (127.0.0.53) and a few configuration missteps, it causes a total internal DNS collapse where CoreDNS pods crash and burn. - The Subtext: AWS is pushing this modern networking standard hard. Subtly, this acts as a major drawback for Rocky/Alma AMIs, silently steering frustrated engineers toward Amazon Linux 2023 (AL2023) as the “easy” way out.

- The “Super Hack”: Automate the clean removal of NetworkManager, bypass the DNS stub listener by symlinking

/etc/resolv.confdirectly to thesystemduplink, and enforce strict state validation during the AMI build. (Full script below).

If you’ve been in the DevOps and SRE space long enough, you know that vendor upgrades rarely go exactly as planned. But lately, if you are running enterprise Linux distributions like Rocky Linux or AlmaLinux on AWS EKS, you might have noticed the ground silently shifting beneath your feet.

With the push to EKS 1.33+, AWS is mandating a shift toward modern, cloud-native networking standards. Specifically, they are phasing out the legacy NetworkManager in favor of systemd-networkd.

While this makes sense on paper, the transition for community distributions has been incredibly painful. AWS support couldn’t resolve our issues, and my SRE team had practically given up, officially halting our EKS upgrade process. It’s hard not to notice that this massive, undocumented friction in Rocky Linux and AlmaLinux conveniently positions AWS’s own Amazon Linux 2023 (AL2023) as the path of least resistance.

I’m hoping the incredible maintainers at free distributions like Rocky Linux and AlmaLinux take note of this architectural shift. But until the official AMIs catch up, we have to fix it ourselves. Here is the exact breakdown of the cascading failure that brought our clusters to their knees, and the “super hack” script we used to fix it.

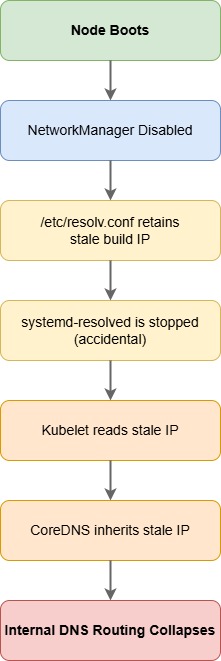

The Investigation: A Cascading SRE Failure

When our EKS 1.33+ worker nodes started booting with 6+ minute latencies or outright failing to join the cluster, I pulled apart our Rocky Linux AMIs to monitor the network startup sequence. What I found was a classic cascading failure of services, stale data, and human error.

Step 1: The Race Condition

Initially, the problem was a violent tug-of-war. NetworkManager was not correctly disabled by default, and cloud-init was still trying to invoke it. This conflicted directly with systemd-networkd, paralyzing the network stack during boot. To fix this, we initially disabled the NetworkManager service and removed it from cloud-init.

Step 2: The Stale Data Landmine

Here is where the trap snapped shut. Because NetworkManager was historically the primary service responsible for dynamically generating and updating /etc/resolv.conf, completely disabling it stopped that file from being updated.

When we baked the new AMI via Packer, /etc/resolv.conf was orphaned and preserved the old configuration—specifically, a stale .2 VPC IP address from the temporary subnet where the AMI build ran.

Step 3: The Human Element

We’ve all been there: during a stressful outage, wires get crossed. While troubleshooting the dead nodes, one of our SREs mistakenly stopped the systemd-resolved service entirely, thinking it was conflicting with something else.

Step 4: Total DNS Collapse

When the new AMI booted up and joined the EKS node group, the environment was a disaster zone:

NetworkManagerwas dead (intentional).systemd-resolvedwas stopped (accidental)./etc/resolv.confcontained a dead, stale IP address from a completely different subnet.

When kubelet started, it dutifully read the host’s broken /etc/resolv.conf and passed it up to CoreDNS. CoreDNS attempted to route traffic to the stale IP, failed, and started crash-looping. Internal DNS resolution (pod.namespace.svc.cluster.local) totally collapsed. The cluster was dead in the water.

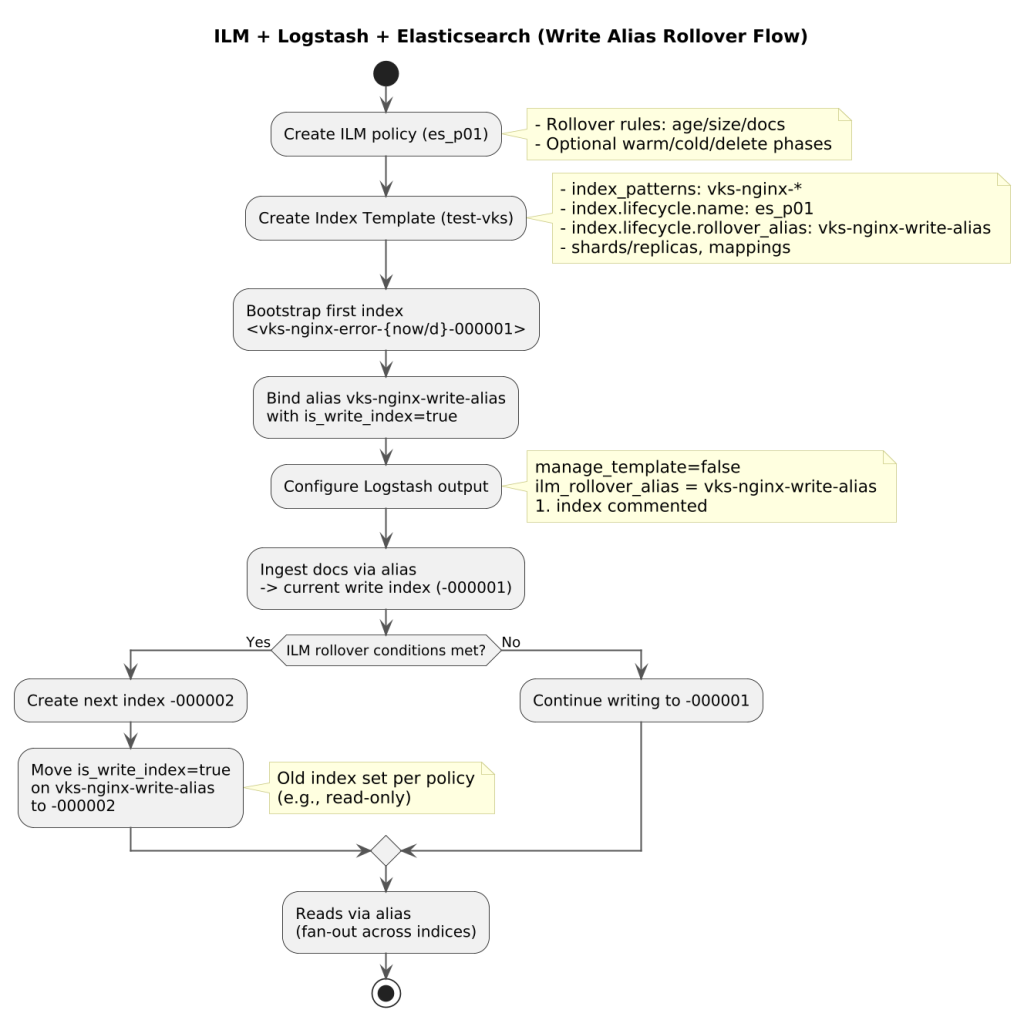

Linux Internals: How systemd Manages DNS (And Why CoreDNS Breaks)

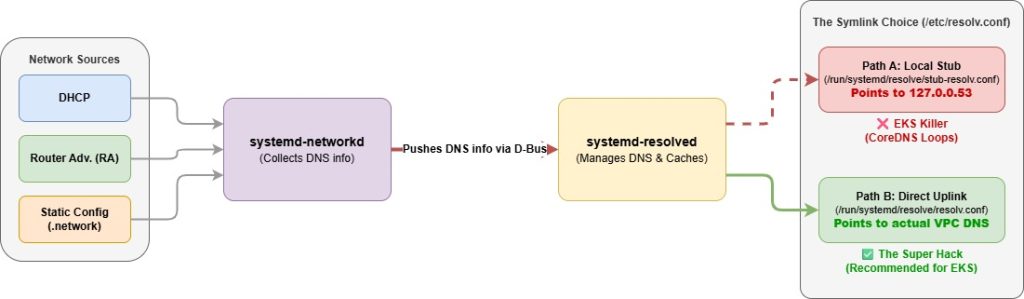

To understand how to permanently fix this, we need to look at how systemd actually handles DNS under the hood. When using systemd-networkd, resolv.conf management is handled through a strict partnership with systemd-resolved.

Here is how the flow works: systemd-networkd collects network and DNS information (from DHCP, Router Advertisements, or static configs) and pushes it to systemd-resolved via D-Bus. To manage your DNS resolution effectively, you must configure the /etc/resolv.conf symbolic link to match your desired mode of operation. You have three choices:

1. The “Recommended” Local DNS Stub (The EKS Killer)

By default, systemd recommends using systemd-resolved as a local DNS cache and manager, providing features like DNS-over-TLS and mDNS.

- The Symlink:

ln -sf /run/systemd/resolve/stub-resolv.conf /etc/resolv.conf - Contents: Points to

127.0.0.53as the only nameserver. - The Problem: This is a disaster for Kubernetes. If Kubelet passes

127.0.0.53to CoreDNS, CoreDNS queries its own loopback interface inside the pod network namespace, blackholing all cluster DNS.

2. Direct Uplink DNS (The “Super Hack” Solution)

This mode bypasses the local stub entirely. The system lists the actual upstream DNS servers (e.g., your AWS VPC nameservers) discovered by systemd-networkd directly in the file.

- The Symlink:

ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf - Contents: Lists all actual VPC DNS servers currently known to

systemd-resolved. - The Benefit: CoreDNS gets the real AWS VPC nameservers, allowing it to route external queries correctly while managing internal cluster resolution perfectly.

3. Static Configuration (Manual)

If you want to manage DNS manually without systemd modifying the file, you break the symlink and create a regular file (rm /etc/resolv.conf). While systemd-networkd still receives DNS info from DHCP, it won’t touch this file. (Not ideal for dynamic cloud environments).

The Solution: A Surgical systemd Cutover

Knowing the internals, the path forward is clear. We needed to not only remove the legacy stack but explicitly rewire the DNS resolution to the Direct Uplink to prevent the stale data trap and bypass the notorious 127.0.0.53 stub listener.

Here is the exact state we achieved:

- Lock down

cloud-initso it stops triggering legacy network services. - Completely mask

NetworkManagerto ensure it never wakes up. - Ensure

systemd-resolvedis enabled and running, but with theDNSStubListenerexplicitly disabled (DNSStubListener=no) so it doesn’t conflict with anything. - Destroy the stale

/etc/resolv.confand create a symlink to the Direct Uplink (ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf). - Reconfigure and restart

systemd-networkd.

Pro-Tip for Debugging: To ensure

systemd-networkdis successfully pushing DNS info to the resolver, verify your.networkfiles in/etc/systemd/network/. EnsureUseDNS=yes(which is the default) is set in the[DHCPv4]section. You can always runresolvectl statusto see exactly which DNS servers are currently assigned to each interface over D-Bus!

The Automation: Production AMI Prep Script

Manual hacks are great for debugging, but SRE is about repeatable automation. I wrote the following script to run during our AMI build process. It standardizes the cutover, wipes out the stale data, and includes a strict validation suite so no engineer has to manually fight this issue again.

#!/bin/bash

# ---------------------------------------------------------------------

# Author: Vamshi Krishna Santhapuri

# Script: eks-production-ami-prep.sh

# Objective: Migrate to systemd-networkd, fix stale DNS & disable Stub

# ---------------------------------------------------------------------

set -euo pipefail

log() { echo -e "\n[$(date +'%Y-%m-%dT%H:%M:%S')] $1"; }

log "Starting production network stack migration..."

# 1. Identify Primary Interface

PRIMARY_IF=$(ip route | grep default | awk '{print $5}' | head -n1)

[ -z "$PRIMARY_IF" ] && PRIMARY_IF=$(ip -o link show | awk -F': ' '{print $2}' | grep -E '^(eth|en)' | head -n1)

if [ -z "$PRIMARY_IF" ]; then

echo "[ERROR] No primary interface detected."

exit 1

fi

log "Targeting interface: $PRIMARY_IF"

# 2. Lock Cloud-Init Network Config

log "Step 1: Disabling cloud-init network management..."

sudo mkdir -p /etc/cloud/cloud.cfg.d/

echo "network: {config: disabled}" | sudo tee /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg

# 3. Deploy systemd-networkd Config

log "Step 2: Configuring systemd-networkd..."

sudo mkdir -p /etc/systemd/network/

cat <<EOF | sudo tee /etc/systemd/network/10-$PRIMARY_IF.network

[Match]

Name=$PRIMARY_IF

[Network]

DHCP=ipv4

LinkLocalAddressing=yes

IPv6AcceptRA=no

[DHCPv4]

ClientIdentifier=mac

RouteMetric=100

UseMTU=true

EOF

# 4. Disable DNS Stub for EKS/CoreDNS compatibility

log "Step 3: Disabling systemd-resolved stub listener..."

sudo mkdir -p /etc/systemd/resolved.conf.d/

cat <<EOF | sudo tee /etc/systemd/resolved.conf.d/99-eks-dns.conf

[Resolve]

DNSStubListener=no

EOF

# 5. Destroy Stale Data & Point resolv.conf to Uplink

log "Step 4: Linking /etc/resolv.conf to Uplink file (Fixing stale IPs)..."

sudo rm -f /etc/resolv.conf

sudo ln -sf /run/systemd/resolve/resolv.conf /etc/resolv.conf

# 6. Decommission NetworkManager

log "Step 5: Stopping and masking NetworkManager..."

sudo systemctl stop NetworkManager NetworkManager-wait-online.service || true

sudo systemctl mask NetworkManager NetworkManager-wait-online.service

# 7. Clean up Legacy Artifacts

log "Step 6: Purging legacy network artifacts..."

sudo rm -f /etc/NetworkManager/system-connections/*

sudo rm -f /etc/sysconfig/network-scripts/ifcfg-$PRIMARY_IF || true

sudo rm -rf /run/systemd/netif/leases/*

# 8. Restart and Enable Services

log "Step 7: Activating new network stack..."

sudo systemctl unmask systemd-networkd

sudo systemctl enable --now systemd-networkd systemd-resolved

sudo systemctl restart systemd-resolved

sudo systemctl restart systemd-networkd

sudo networkctl reconfigure "$PRIMARY_IF"

# =====================================================================

# PRODUCTION VALIDATION SUITE

# =====================================================================

log "RUNNING PRODUCTION VALIDATION..."

# CHECK 1: Ensure systemd-networkd is managing the interface

if ! networkctl status "$PRIMARY_IF" | grep -q "configured"; then

echo "[FAIL] $PRIMARY_IF is not in 'configured' state."

exit 1

fi

# CHECK 2: Ensure the Stub Listener (127.0.0.53) is DEAD

if ss -lnpt | grep -q "127.0.0.53:53"; then

echo "[FAIL] DNS Stub Listener is still active on 127.0.0.53! CoreDNS will fail."

exit 1

fi

# CHECK 3: Verify /etc/resolv.conf link integrity

if [ "$(readlink /etc/resolv.conf)" != "/run/systemd/resolve/resolv.conf" ]; then

echo "[FAIL] /etc/resolv.conf is not pointing to the uplink resolv.conf."

exit 1

fi

# CHECK 4: Verify DNS resolution is functional on the node

if ! host -W 2 google.com > /dev/null 2>&1; then

# Fallback to check if nameservers exist if internet isn't available in VPC during build

if ! grep -q "nameserver" /etc/resolv.conf; then

echo "[FAIL] No nameservers found in /etc/resolv.conf."

exit 1

fi

fi

log "SUCCESS: All production validations passed."

echo "--------------------------------------------------------"

echo "Primary IF: $PRIMARY_IF"

echo "Resolv.conf Target: $(readlink /etc/resolv.conf)"

grep "nameserver" /etc/resolv.conf

resolvectl status "$PRIMARY_IF" | grep "DNS Servers" || true

echo "--------------------------------------------------------"

The Results

By actively taking control of the systemd stack and ensuring /etc/resolv.conf was dynamically linked rather than statically abandoned, we completely unblocked our EKS 1.33+ upgrade.

More impressively, our system bootup time dropped from a crippling 6+ minutes down to under 2 minutes. We shouldn’t have to abandon fantastic, free enterprise distributions just because a cloud provider shifts their networking paradigm. If your team is struggling with AWS EKS upgrades on Rocky Linux or AlmaLinux, drop this script into your Packer pipeline and get your clusters back in the fast lane.